For most of military history, wars were shaped by numbers, geography, and raw firepower. Whoever had more soldiers, more weapons, or better terrain usually held the advantage. That logic is quietly breaking down. Modern warfare is no longer defined only by tanks, jets, and missiles, but by algorithms running silently behind screens—an invisible layer of decision-making that never sleeps, never panics, and never forgets.

Artificial intelligence didn’t arrive on the battlefield with a dramatic announcement. It crept in gradually, first as software that helped analyze satellite images, then as systems that assisted targeting, logistics, and surveillance. Today, AI is no longer a support tool in the background. In many areas, it is becoming a core part of how wars are planned, fought, and even prevented.

This shift raises uncomfortable questions. Who really makes decisions in modern war? How much control do humans still have? And what happens when machines begin to act faster than humans can understand?

From Soldiers to Systems: The Quiet Transformation of War

The most important change AI has brought to warfare isn’t a new weapon—it’s a new way of thinking. Militaries are moving away from purely human-driven decision chains toward systems that process massive amounts of data and recommend actions in real time.

Modern battlefields generate overwhelming information: drone feeds, radar signals, intercepted communications, satellite imagery, cyber activity, and sensor data from land, sea, air, and space. No human team can process all of this fast enough during combat. AI can.

Instead of replacing soldiers outright, AI reshapes their role. Commanders increasingly rely on machine-generated insights to decide where to move forces, when to strike, and how to anticipate enemy behavior. War is becoming less about reaction and more about prediction.

AI in Intelligence and Surveillance: Seeing Everything, Missing Nothing

One of AI’s earliest and most powerful military uses is intelligence analysis. Surveillance used to depend heavily on human analysts reviewing photos, videos, and intercepted data manually. That approach worked, but it was slow and prone to fatigue and bias.

AI systems now scan satellite imagery to identify unusual movements, detect hidden facilities, track vehicles across vast regions, and flag changes that human eyes might miss. Facial recognition, pattern analysis, and behavioral modeling are increasingly common in intelligence operations.

Drones equipped with AI can autonomously track targets, attempt to distinguish between civilian and military activity, and follow objects across complex environments. The result is persistent surveillance: an enemy that is always watching, always recording, and always learning.

This creates a battlefield where hiding becomes harder, deception must be more sophisticated, and mistakes are exposed almost instantly.

Autonomous Weapons: When Machines Pull the Trigger

The most controversial use of AI in warfare is autonomous weapons—systems capable of selecting and engaging targets without direct human control. These are often described as “killer robots,” though the reality is more complex and far less cinematic.

Some existing systems already operate with limited autonomy, such as missile defense platforms that react faster than human operators could. Loitering munitions, sometimes called “suicide drones,” can patrol an area, identify targets, and strike with minimal human input.

Supporters argue that AI-driven weapons can reduce human error, react faster than opponents, and potentially lower civilian casualties by improving precision. Critics warn that removing humans from life-and-death decisions crosses a moral line and creates serious risks of escalation, malfunction, or misuse.

Once weapons operate at machine speed, humans may no longer be able to intervene in time to stop unintended consequences.

Decision Speed: War at the Pace of Algorithms

AI doesn’t just change weapons—it changes time itself. Decisions that once took minutes or hours can now be made in seconds. This compression of time alters the entire nature of conflict.

In traditional warfare, commanders had space to deliberate, communicate, and reassess. AI-driven systems push toward near-instant responses, especially in areas like missile defense, cyber operations, and electronic warfare.

The danger is that faster decisions leave less room for judgment. An AI system might interpret ambiguous data as a threat and trigger a response before humans fully understand the situation. In high-stakes environments, that speed could turn misunderstandings into irreversible actions.

Modern warfare increasingly risks becoming a contest of reaction times rather than strategy.

AI and Cyber Warfare: The Invisible Front Line

Unlike tanks or aircraft, cyber weapons leave no smoke trails. AI has transformed cyber warfare into a constantly evolving battle fought across networks, infrastructure, and digital systems.

AI can automatically scan for vulnerabilities, launch adaptive attacks, and respond to intrusions faster than human teams. Defensive systems powered by AI monitor networks in real time, identifying anomalies and isolating threats before damage spreads.

This creates an arms race where attackers and defenders continuously train their algorithms against each other. Cyber conflict rarely makes headlines, but it quietly shapes the balance of power, disrupting economies, communications, and even military command systems.

In future conflicts, the first battles may occur entirely in cyberspace, long before the first shot is fired.

Logistics and Supply Chains: War Runs on Data

Wars are won not only by firepower, but by logistics. AI plays a growing role in managing supply chains, predicting equipment failures, optimizing transport routes, and ensuring forces receive what they need at the right time.

Predictive maintenance systems use AI to analyze sensor data from vehicles, aircraft, and weapons, identifying problems before breakdowns occur. This reduces downtime and extends the lifespan of expensive military assets.
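
To make the idea concrete, here is a minimal sketch of the simplest form of this kind of monitoring: flagging a sensor reading that strays far from its recent history. It is an illustration under stated assumptions, not how any fielded system works. The function name `flag_anomalies`, the window size, the threshold, and the vibration readings are all hypothetical, and real predictive maintenance relies on far richer models and data.

```python
from collections import deque
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate from the mean of the
    preceding `window` readings by more than `threshold` standard
    deviations. (Hypothetical helper for illustration only.)"""
    history = deque(maxlen=window)  # trailing window of recent readings
    flagged = []
    for i, value in enumerate(readings):
        # Only judge a reading once a full window of history exists.
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            # Skip perfectly flat history to avoid dividing meaning by zero spread.
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                flagged.append(i)
        history.append(value)
    return flagged


# Made-up engine vibration readings in arbitrary units; index 7 is a spike.
vibration = [1.0, 1.1, 0.9, 1.0, 1.2, 1.1, 1.0, 2.5, 1.1, 1.0]
print(flag_anomalies(vibration))  # prints [7]
```

A flagged reading would not prove a part is failing. It would only tell a maintenance crew where to look first, which is the modest but useful role the paragraph above describes.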

In large-scale conflicts, logistics often determines success more than tactics. AI gives militaries a quieter but decisive advantage by making their operations more efficient, resilient, and adaptive.

Human Control vs Machine Logic: Who Is Really in Charge?

As AI systems become more capable, a central tension grows louder: how much authority should machines have in war? Militaries insist that humans remain “in the loop” for critical decisions, especially those involving lethal force. In practice, that line is becoming blurry.

When AI systems generate recommendations faster than humans can analyze alternatives, rejecting the machine’s suggestion becomes psychologically and operationally difficult. Over time, commanders may default to trusting the system, not because it’s perfect, but because it’s efficient.

This creates a subtle shift of power. The human role moves from decision-maker to supervisor, responsible for approving actions already framed by algorithms. Accountability becomes complicated. If an AI-assisted decision leads to disaster, who is responsible—the operator, the commander, the programmer, or the system itself?

These questions remain largely unanswered.

The Ethics of AI Warfare: Lines That Keep Moving

Ethical debates about AI in warfare are no longer theoretical. International organizations, human rights groups, and military planners are actively arguing over limits, bans, and regulations.

One concern is dehumanization. War is already brutal, but AI risks creating emotional distance between those who initiate violence and those who suffer from it. When decisions are reduced to data points and probabilities, the human cost can fade into abstraction.

Another issue is bias. AI systems are trained on data, and data reflects human assumptions, priorities, and blind spots. A biased dataset can produce biased targeting, misclassification, or disproportionate harm, especially in complex civilian environments.

Unlike traditional weapons, AI systems can change over time as they are retrained or updated on new data. An algorithm that behaves predictably today may behave in unexpected ways after its next update, making oversight even harder.

Global AI Arms Race: Everyone Is Watching Everyone

No major military power wants to be left behind. The result is a global race to integrate AI into every aspect of warfare. The United States, China, Russia, and other nations are investing heavily in AI research, autonomous systems, and data-driven military platforms.

This competition creates a paradox. Even if leaders recognize the risks, slowing down feels dangerous. If one nation restrains itself while another pushes forward, the balance of power could shift quickly.

Unlike nuclear weapons, AI technology is widely accessible. Much of the underlying research comes from civilian sectors—universities, startups, and commercial labs. That makes containment far more difficult and increases the risk of proliferation to smaller states or non-state actors.

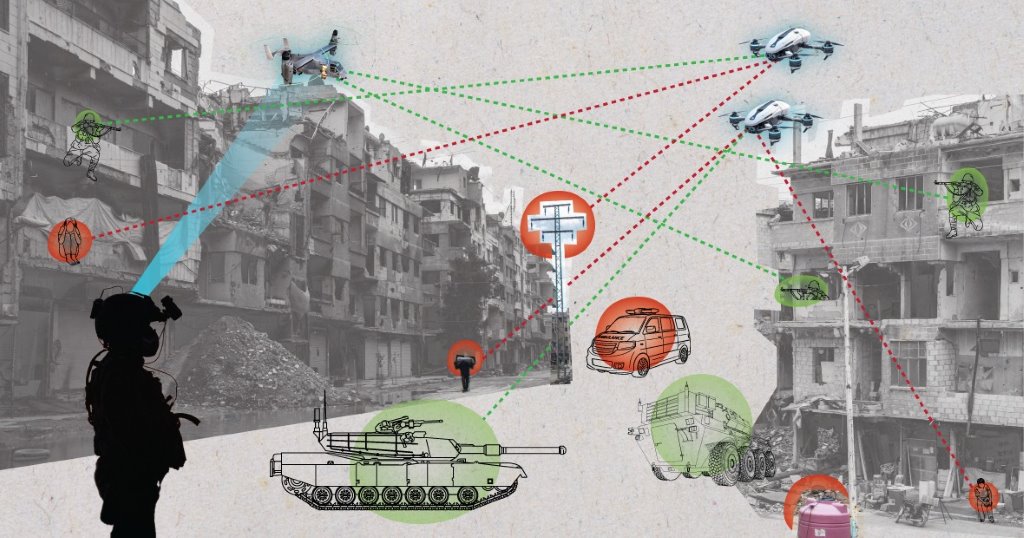

AI in Urban Warfare: Fighting in Human Environments

Modern conflicts increasingly take place in cities, where civilians, infrastructure, and combatants are deeply intertwined. AI is often promoted as a solution to the complexity of urban warfare.

Advanced recognition systems can analyze movement patterns, identify potential threats, and assist in distinguishing between civilian and military activity. In theory, this could reduce collateral damage.

In reality, cities are chaotic. People behave unpredictably, and context changes rapidly. An AI trained in one environment may fail in another. Mistakes in urban settings carry immediate and visible human consequences.

The promise of cleaner, more precise warfare clashes with the reality of messy human environments.

Psychological Warfare and Information Control

AI isn’t only changing how wars are fought physically—it’s reshaping how they’re perceived. Information warfare has become a battlefield of its own.

AI systems can generate propaganda, fake videos, deepfake speeches, and automated social media campaigns at scale. These tools blur the line between truth and manipulation, undermining public trust and shaping narratives in real time.

Military and political actors can use AI to influence enemy populations, disrupt morale, and confuse decision-makers without firing a shot. Defending against these tactics is difficult, especially in open societies where information flows freely.

In future conflicts, controlling perception may matter as much as controlling territory.

Smaller Forces, Bigger Impact

One unexpected effect of AI is that it empowers smaller militaries and even non-state actors. Autonomous drones, AI-assisted targeting, and cyber tools reduce the advantage traditionally held by large armies.

A small group with access to AI-enhanced systems can punch far above its weight, striking with precision, speed, and anonymity. This shifts global power dynamics and makes conflicts harder to predict and contain.

As technology lowers the barrier to entry, warfare becomes more decentralized—and more unstable.

The Risk of Accidental War

Perhaps the most serious danger of AI-driven warfare isn’t deliberate aggression, but misunderstanding. When autonomous systems interact—monitoring, reacting, counter-reacting—small errors can cascade quickly.

An AI system might misinterpret a routine exercise as a hostile act. Another system responds automatically. Humans step in too late. Escalation happens without clear intent.

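History shows that several near-misses stopped short of disaster because a human hesitated. In 1983, for example, Soviet officer Stanislav Petrov judged a satellite warning of incoming missiles to be a false alarm and declined to treat it as an attack. Machines may not hesitate.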

What War Might Look Like in the Near Future

The future battlefield will likely be quieter, faster, and less visible to the public. Conflicts may unfold through cyber disruptions, drone swarms, economic pressure, and information manipulation long before conventional fighting begins.

Human soldiers will still matter, but they will operate alongside intelligent systems that shape their choices and constraints. Victory may depend less on bravery and more on data quality, algorithm design, and system integration.

In this environment, superiority is measured not just by weapons, but by intelligence itself.

Where This Leaves Modern Warfare

Artificial intelligence is not a distant threat or a speculative concept. It is already embedded in how modern militaries think, plan, and act. The real question is not whether AI will change warfare—it already has—but whether humans can shape that change responsibly.

The challenge ahead is finding a balance between speed and judgment, efficiency and ethics, automation and accountability. Wars may always involve violence, but the way decisions are made still matters.

As AI continues to evolve, the battlefield becomes not just a test of force, but a test of restraint.